It seems clear that AI will be a major platform shift and perhaps the most significant one in the history of software. Machine learning has already given those who can harness it unprecedented levels of insight. Generative AI promises to turn those insights into action. In a B2B software industry defined by efficiency this is something like the Holy Grail. There is lots to be excited about in this shift, but it requires totally new ways of thinking about moats, product, and building companies. As we have tried to get to those new ways of thinking we have become particularly excited about industry brains: AI first businesses able to combine customer and 3rd party data to create an intelligence layer for traditional industries, in particular logistics, healthcare, law, and manufacturing.

B2B software today

A reasonable definition of B2B software is an attempt to improve business efficiency by taking a bunch of processes and turning them into code. ‘Efficiency’ here can have many faces: individuals doing their jobs faster and cheaper; teams working better together; management having a better understanding of what’s going on; and so on. The goal is to get as close as possible to total automation and transparency.

No single piece of software can cater to every process and the result is that businesses are run on a software stack that ranges from the generalist (e.g. Microsoft Office) through to the highly specialized (e.g. Epic and Anaplan).

The industry progresses on two axes. The first, mostly driving generalist software, is platform shifts: a new style of computing arrives that is sufficiently better than the status quo to cause widespread business adoption and sufficiently different to give opportunities for new generalists. Classic examples of this are the move to desktop, the emergence of the internet and mobile, and the development of enterprise cloud. While incumbent generalist software providers often adapt and acquire their way through platform shifts, these are the best moments for huge new generalist software businesses to be born or mature, with Salesforce and the shift to cloud being the best modern example.

The second axis is verticalization. This mostly drives specialized software and comes from a combination of computers and computation pushing ever further into people’s lives while the cost of building software keeps falling. Entrepreneurs find industries with a stack of generalist software products and sufficiently similar processes between businesses that a single purpose-built product could be superior. Better yet if there could be some payments handling or a marketplace thrown in.

These axes cut across both enterprise and SMB, though it is fair to say that there tend to be more SMB opportunities in verticalization and that most generalist products need to get to enterprise for real scale. Most of the startup action in recent years has been in verticalization because we have not had a major platform shift since cloud and incremental progress favours generalist incumbents. AI, of course, changes this.

Dude where’s my moat?

AI is the next platform shift and is perhaps the most significant one in the history of software. This is not just because of the potential power of AI but because it is a totally different way of thinking about software. Where in traditional software you identify a process and turn it into code, with AI you try and learn a process and turn it into an automated action. The moats involved are very different.

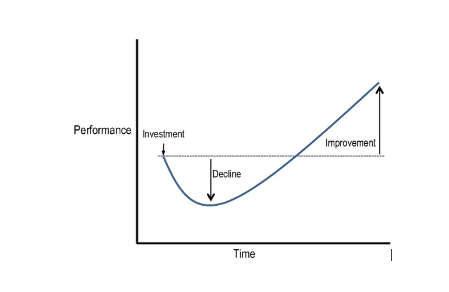

Because B2B software is all about efficiency it is best to think about moats in terms of an efficiency or performance J curve. The dotted line below is the customer’s current level of efficiency. They buy software to improve efficiency but have to accept a short-term performance decline as systems are migrated and staff get used to working in a new way:

B2B software sales come from convincing customers that the short term decline will be worth the long term improvement. B2B software moats come from making the J curve for switching away from your product as deep as possible. This tends to come from the interaction of data and workflows combined with business criticality.
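To make the J curve idea concrete, here is a minimal sketch in Python. It assumes a very simplified model: efficiency drops by a fixed amount during migration and then settles at a fixed improvement. The function names and all of the numbers are hypothetical and purely illustrative.

```python
def j_curve(baseline, dip, uplift, migration_periods, horizon):
    """Efficiency per period after a switch: a dip during migration, then an uplift."""
    return [
        baseline - dip if t < migration_periods else baseline + uplift
        for t in range(horizon)
    ]


def break_even_period(baseline, curve):
    """First period at which cumulative gains have repaid the cumulative dip, else None."""
    net = 0.0
    for t, efficiency in enumerate(curve):
        net += efficiency - baseline
        if net >= 0:
            return t
    return None


# Hypothetical numbers on an efficiency index where the status quo is 100.
# Shallow curve: a 20-point dip for 3 periods, then a 10-point improvement.
shallow = j_curve(baseline=100, dip=20, uplift=10, migration_periods=3, horizon=24)
# Deep curve: a 40-point dip for 6 periods, then the same 10-point improvement.
deep = j_curve(baseline=100, dip=40, uplift=10, migration_periods=6, horizon=24)

print(break_even_period(100, shallow))
print(break_even_period(100, deep))
```

With these made-up numbers the shallow switch pays back in period 8, while the deep one does not pay back within the 24-period horizon. That is the sense in which a deep J curve works as a moat: the promised improvement is the same, but the cost of getting there is too high to justify switching.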

The data side is intuitive and much discussed: shifting data is a pain and the more of it and the more critical it is the less likely a customer is to switch. So a recruitment tool might have a shallow data J curve (a few quarters of data you could in extremis run your business without) while a CRM has a very deep one (potentially years of the most important data in most businesses). Even as customers come to expect API access, expect data to be stored away from the application, etc., this remains an important switching cost.

The workflow curve comes because a software company does not just turn a process into code; in the workflow it turns the way that people work into a set of inputs that a computer can understand. This is the real art of software design: workflows that are sufficiently intuitive that they are easy to adopt but sufficiently extensible that mastering them becomes part of how a user demonstrates economic value.

Where data and workflows combine there are almost insurmountably deep J curves, as with Salesforce, which deals with long-lasting, critical data and has an army of power users whose productivity is tied to their ability to squeeze the most from Salesforce workflows. And beyond the moat, workflows are the beginning of virality: ‘proficient at Microsoft Office suite’ may have become a punch line, but it is probably the widest moat in tech, no data required.

AI is completely different because learning followed by action is much less dependent on workflows than turning process into code. Indeed, the key UI feature of ChatGPT has been that it is workflow-less: just ask it what to do and keep iterating your questions until you get to a desired result. It seems unlikely that in the long term a question and answer style interface will be the gold standard of UI, but nevertheless much of what is now powered by workflows will in AI products be powered by algorithms.

With AI businesses covering much of what was done by workflow with algorithms, and able to draw data via API rather than requiring migration, the old software J curve is gone. The moat for AI first businesses will be in their algorithms/models, their proprietary data, and their inventiveness in employing these.

Where we are today

So far we have seen incumbent generalists incorporate AI to try and get ahead of this change (e.g. Intercom, Microsoft, GitHub, even Bloomberg and Iron Mountain). Over the coming months we will start to see ‘AI first’ challengers come for those incumbents as they did with cloud. And in due course we will see it in the verticals.

There’s lots to go after here, and much debate about whether it favours incumbents. Rather than getting into that, we have been thinking about what can be done now that could not be done before. And what has really excited us is the cohort of companies building an intelligence layer on top of existing software to become an industry brain.

AI as industry brain

The AI as industrial brain companies look to take data out of a company’s existing stack and combine it with 3rd party data and/or proprietary data sources of their own. They then use machine learning to derive insights and, increasingly, generative AI to drive actions.

To some extent this is a generic description of the route to market for AI first businesses. Rather than go through the J curve replacement discussion with customers they will look to sit on top of existing systems and provide new insights and actions. Their long term goal will be to turn CRMs, ERPs, etc. into commodity suppliers of data for their more powerful AI solutions. Industrial brain businesses, though, take this further because by being industry focused they benefit from data network effects.

These network effects come because, within the same industry, an algorithm designed for company N can have immediate benefits for company N+1 and vice versa. Furthermore, by focusing on an industry, industrial brain companies can be smart about bringing in third party data and building proprietary data sets. This begins with algorithms but over time we will see industry and sector specific models that take the data moat and network effect even further, leading to industrial brain companies ‘owning’ an industry, reaching a tipping point after which they have an unassailable data lead and become the standard.

To become a platform is of course the dream of any software business and notoriously hard to do. But with the platform shift to AI the opportunity is there: by combining ML derived insights with generative AI derived actions and putting them into company, industry, and macro context, AI first (and particularly industrial brain) companies are natural platform plays.

Examples of the brain

The more complex a business’s set of inputs the more the context that AI can provide and so the higher the chance of becoming an industry brain. We think that our portfolio company Altana is becoming the brain of global trade and that there are big opportunities in logistics, healthcare, law and manufacturing.

Using law as an example, in cross-border M&A complexity rules. There is a whole suite of documents which need to work together, with bankers, lawyers, accountants, and advisors, in multiple jurisdictions, all inputting into them. While the most important terms (who gets paid what, is there a remaining minority stake or earn out, what are the associated rights and responsibilities, etc.) will have been agreed at a principals’ level, much is not. In particular the mechanics for what happens if there are disagreements and what each side is representing and warranting need to be worked out in detail. The topic sounds somewhat dry and technical, and indeed it is both, but it gets very visceral when things go wrong.

While some of these details are determined by the relative negotiating strength of the parties, the starting point is always ‘what’s market?’ i.e. what has been done in similar transactions? Currently ‘market’ exists in the minds of experienced lawyers and in their firms’ precedent documents. But the true ‘market’ exists in all of the transactions that other lawyers at other firms have done, so the process is always squishy and many calls and turns of documents are wasted.

There is room for a product that trains across law firm precedent banks, client precedents, as well as third party data like LexisNexis, court filings, and wire reports of out of court settlements. Such a product could, for any of these detail clauses, give a ranking on criticality and likelihood of being part of a dispute. It could then show whether the current position is better or worse than market, better or worse than the client has gotten elsewhere, and, given an estimated relative strength of the parties, the chances of improving it.

In use that might look like “2 of the representations in the Share Purchase Agreement are off market, critical, and a common source of dispute. It will be hard to move these on relative strength alone, so here are some less critical rights in the Shareholders’ Agreement where you are better than market and might want to trade. Here are sample drafts, emails for the other side, emails for your client explaining the changes.”
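As a rough illustration of the ranking described above, here is a toy sketch in Python. It is not how such a product would actually be built: a simple median comparison stands in for a trained model, and the clause names, scores, and field names are all hypothetical.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Clause:
    name: str
    criticality: float             # 0..1, hypothetical importance score
    dispute_rate: float            # 0..1, hypothetical share of comparable deals ending in dispute
    current_position: float        # hypothetical favourability of the current draft for the client
    market_positions: list[float]  # favourability of the same clause in comparable deals


def vs_market(clause):
    """Positive means better than the median comparable deal, negative means worse."""
    return clause.current_position - median(clause.market_positions)


def priority_list(clauses):
    """Off-market clauses, ordered by criticality times dispute likelihood."""
    off_market = [c for c in clauses if vs_market(c) < 0]
    return sorted(off_market, key=lambda c: c.criticality * c.dispute_rate, reverse=True)


def tradeable(clauses):
    """Clauses where the client is better than market and might offer a concession."""
    return [c for c in clauses if vs_market(c) > 0]


# Entirely made-up example data.
clauses = [
    Clause("Title warranty", 0.8, 0.2, 2.0, [3.0, 3.0, 4.0]),
    Clause("Tax warranty", 0.9, 0.4, 3.0, [4.0, 5.0, 6.0]),
    Clause("Drag-along right", 0.3, 0.1, 7.0, [4.0, 5.0, 6.0]),
]

print([c.name for c in priority_list(clauses)])
print([c.name for c in tradeable(clauses)])
```

In this made-up data the two warranties are worse than market and are surfaced first, while the drag-along right is better than market and shows up as something to trade. The real value would come from the breadth of precedent data behind `market_positions`, which is exactly where the data network effect sits.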

The immediate sell is just pure productivity: get your lawyers working on the clauses that really matter to your clients, and where there are issues focus on those straight away rather than just churning through drafts. The long term moat is that with every new law firm or other relevant data source the product gets closer to being the standard for what market is and harder and harder to replace.

How do you build a brain?

Building software companies, whether traditional or AI, is a difficult proposition. But building industry brains is differently difficult in at least three ways: they require teams with a combination of technical and deep industry knowledge; big, conservative customers need to trust them with their most sensitive data; and they will need to marshal serious amounts of capital.

The moats for AI first businesses will be derived from data. This means that, while you might be able to start with an app built on top of a foundation model, eventually you will need to at least fine tune if not go to your own industry or sector specific models. To do this will require highly competent technical teams paired with people who know an industry well enough to curate the right third party data sources and get to insights that customers really need.

The kind of customers that industrial brain companies will need to partner with are highly sophisticated but often not tech first. They will need to trust that their data will be used to derive value but will not fall into the hands of competitors. Convincing them of this will not be a simple task.

Marshalling capital will be particularly important. No doubt the costs of training and inferring from LLMs will fall, and AI savvy engineers will become more plentiful. But everything we see so far indicates that LLMs get better with more data, more parameters, and more compute. All of this means more money and all of this must be done without the playbook that has been developed for SaaS.

OMERS and the industrial brain

Becoming the intelligence layer for a significant proportion of global GDP is of course a hike that’s worth the view, but it is a hike in uncharted territory and not for the faint-hearted. The ideal capital partner for the journey would have deep pockets, a reputation for probity, and contacts throughout traditional industry. It will shock you to know, dear reader, that OMERS is just such a partner. We’d love to hear from anyone building in this space. Feel free to connect with me, Henry Gladwyn or Namat Bahram.